Some design patterns are used all the time and their names are known to all - like facade, factories and proxies. Some design patterns are more popular than they should be. But some, although rarely mentioned by name, have recently been "rediscovered". I'm talking about Flyweight and Memento.

Flyweight

This one basically lets us share n instances between m concurrent clients when m > n. It separates "intrinsic state", held in the normal class members, from "extrinsic state", which is maintained via parameter passing and return values. Make your intrinsic state immutable, and you can share the same instance between multiple clients. Cool, huh? Priceless. Look at message-passing concurrency instead of shared-memory concurrency, REST, transactionless architecture... All these treasures actually follow the same spirit as Flyweight.

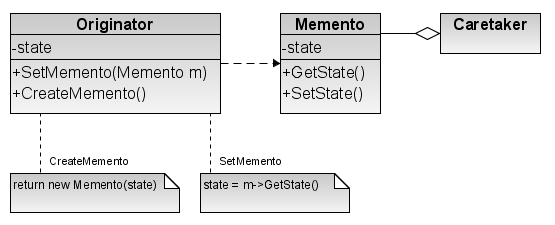
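To make the intrinsic/extrinsic split concrete, here is a minimal sketch in Java. The `Glyph` class, its pool and the `renderAt` method are hypothetical names made up for illustration; they are not taken from any library.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical flyweight: the symbol is intrinsic state and never changes,
// so a single Glyph per symbol can be shared by any number of clients.
final class Glyph {
    private static final Map<Character, Glyph> POOL = new ConcurrentHashMap<>();

    private final char symbol; // intrinsic, immutable

    private Glyph(char symbol) {
        this.symbol = symbol;
    }

    // Factory that hands out the shared instance for a symbol.
    static Glyph of(char symbol) {
        return POOL.computeIfAbsent(symbol, Glyph::new);
    }

    // Extrinsic state (the position) comes in as parameters and goes out
    // in the return value; nothing about the caller is stored in the Glyph.
    String renderAt(int row, int column) {
        return symbol + "@" + row + ":" + column;
    }
}
```

Two clients calling `Glyph.of('a')` get the very same object, and each passes its own position to `renderAt`, so there is no shared mutable state to fight over.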

Memento

This pattern suggests that if you want to save your object's state, you had better export it into a new dedicated memento object and store the memento. Later you restore your object from the memento. This is like serialization, only serialization didn't follow the pattern, unfortunately.
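A minimal sketch of the idea in Java, assuming a made-up `Counter` class as the object whose state we want to save:

```java
// Hypothetical originator: its state never leaks out directly.
final class Counter {
    private int value;

    void increment() {
        value++;
    }

    int value() {
        return value;
    }

    // The memento: an immutable snapshot that only Counter can look inside.
    static final class Memento {
        private final int savedValue;

        private Memento(int savedValue) {
            this.savedValue = savedValue;
        }
    }

    // Export the current state into a dedicated memento object.
    Memento save() {
        return new Memento(value);
    }

    // Restore the state from a previously stored memento.
    void restore(Memento memento) {
        this.value = memento.savedValue;
    }
}
```

Whoever stores the memento can keep it for as long as they like, but cannot read or change what is inside, so the encapsulation of `Counter` stays intact.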


Josh Bloch suggests we do it manually with so-called "Serialization Proxies" - see item 78 in chapter 11 of the 2nd edition of "Effective Java". Here's a slide from his JavaOne 2006 presentation:

Now imagine persistence architectures actually using intermediate memento objects... instead of modifying the bytecode of actual objects, breaking encapsulation by accessing their private fields, imposing constraints like public empty constructors and so on... Maybe we would have been better off with mementos...?
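For reference, the Serialization Proxy idiom mentioned above looks roughly like the sketch below. It is loosely modeled on the idea described in "Effective Java", not copied from it: the `Period` class with two `long` fields and the `roundTrip` helper are simplifications I made up for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InvalidObjectException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

final class Period implements Serializable {
    private static final long serialVersionUID = 1L;
    private final long start;
    private final long end;

    Period(long start, long end) {
        if (start > end) throw new IllegalArgumentException("start after end");
        this.start = start;
        this.end = end;
    }

    long start() { return start; }
    long end() { return end; }

    // The proxy plays the role of the memento: it is what gets written.
    private static final class SerializationProxy implements Serializable {
        private static final long serialVersionUID = 1L;
        private final long start;
        private final long end;

        SerializationProxy(Period period) {
            this.start = period.start;
            this.end = period.end;
        }

        // On the way back in, rebuild the real object through its constructor,
        // so the invariant check runs again.
        private Object readResolve() {
            return new Period(start, end);
        }
    }

    // Serialization writes the proxy instead of this object.
    private Object writeReplace() {
        return new SerializationProxy(this);
    }

    // A Period must never be read from a stream directly.
    private void readObject(ObjectInputStream in) throws InvalidObjectException {
        throw new InvalidObjectException("Proxy required");
    }
}

class SerializationProxyDemo {
    // Serializes an object to bytes and reads it back.
    static Object roundTrip(Object original) throws IOException, ClassNotFoundException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(original);
        }
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            return in.readObject();
        }
    }
}
```

The point is that the restored `Period` is created by ordinary code, with no back door into private fields and no need for a public empty constructor.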

Friday, January 9, 2009

The return of forgotten design patterns

Posted by Yardena at 5:35 PM

Labels: java, software engineering

Static types are from Mars, Dynamic types are from Venus

static - associated with logic and acting by the rules, strong, efficient, usually responsible for safety and order enforcement; but uncompromising (for better or worse), non-adaptive, weak in communication skills.

dynamic - associated with beauty and elegance, light, good in communication skills, can usually be easily made to do what you want them to, change all the time and adapt to change well; but unpredictable, act on intuition rather than logic, often seen as less efficient and weaker.

Posted by Yardena at 1:22 PM

Labels: software engineering

Wednesday, January 7, 2009

Types and components

Just some thoughts following a recent conversation I had. Don't we always want static types? If we can detect errors in our program, why not do it as early as possible? Sure. So when is "as early as possible"? I think the answer depends on what our program is - is it one monolithic piece or a component?

In the past monolithic software was prevalent, but today I would bet that most software is meant to be a component. Just look at open-source as it used to be before Java - you'd usually download the C source code, change it if you needed to, build it all on your machine, and create one big executable. (And BTW - congratulations, you are a geek.) Cross-platform Java changed the situation. Now open-source projects give you a jar to download, you put it on your classpath and you are ready to go. This lowered the bar for open-source adoption, and contributed (alongside less restrictive licenses and other factors) to a baby boom of open-source frameworks and tools. Nowadays a Java developer cannot even imagine having no 3rd party jars on the classpath!

A static type check validates our software component against other components in the compilation environment. Does it match the runtime environment? What about different configurations of the runtime environment - there are tests and real deployments, and various types of deployments, and any given installation environment can change over time, with new components being added and others updated or removed? How do we guarantee that compile-time checks still hold? The short answer is - we can't. We need dynamic type safety anyway. Now let's examine the added value and the price (yes, there is one!) of deeply static types.

On the code organization level, we try to reduce the dependencies to a bare minimum - hide classes behind interfaces that we hope will remain more stable. The problem is that the number of interfaces and factories in our application grows; while pursuing modularity we sacrifice simplicity... So maybe the problem is in the name? Some go as far as adding support for structural types, minimizing the dependency to a single field/method signature (not a problem-free solution, but there are interesting refinements). All this may help, but doesn't really solve the problem.

Another aspect we need to deal with is building and packaging the software. Here we enter the world of dependency management, the world of "make", Ant, Maven, repositories, jar versions; if the software we work on is large and complex enough, simply speaking - we enter the world of pain. I still find it strange that we haven't found a better way.

As for application deployment and its problems, we'll get back to it later. But the truth is that no matter how hard we try, we can't guarantee there will be no errors when we deploy our software, so ... the JVM doesn't trust us and gives us verification.

Simply put, when a class is compiled, some of its requirements from other classes are captured and encoded into the bytecode. The JVM then checks them when the class is loaded, and rejects the class if they can't be met. (This is really an over-simplified description of a complex algorithm, which also takes time to execute, despite optimization efforts on the JVM side.) So this isn't really a dynamic check, it's something in between - names in our class get linked when it is loaded. In the classic Java SE class-loading scheme, where components are basically a chain, this scheme should work. But if we want real components, ones we can add, override, replace or remove while the program is running - sweet turns sour. Our interfaces and factories have names, and the classes that represent them need to reside in some "common vocabulary", usually loaded by the parent classloader, because it's not only the class bytecode that matters, but also who loaded what. Since we are talking about actual classes, not their names, once we have loaded two components they cannot change their protocol of communication without reloading their parent. They also can't use a different version of a sub-component that the parent component has referenced.
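The "who loaded what" part can be seen in a small experiment. This is only a sketch: `Widget` and `IsolatingLoader` are hypothetical names, and it assumes the compiled `Widget.class` file is reachable as a resource through the normal application classloader.

```java
import java.io.IOException;
import java.io.InputStream;

// Hypothetical stand-in for a class that two components both want to use.
class Widget {
}

// A loader that defines one named class itself instead of asking its parent.
class IsolatingLoader extends ClassLoader {
    private final String isolatedName;

    IsolatingLoader(String isolatedName, ClassLoader parent) {
        super(parent);
        this.isolatedName = isolatedName;
    }

    @Override
    protected Class<?> loadClass(String name, boolean resolve) throws ClassNotFoundException {
        if (!name.equals(isolatedName)) {
            return super.loadClass(name, resolve); // usual parent-first delegation
        }
        synchronized (getClassLoadingLock(name)) {
            Class<?> loaded = findLoadedClass(name);
            if (loaded == null) {
                String resource = name.replace('.', '/') + ".class";
                try (InputStream in = getParent().getResourceAsStream(resource)) {
                    if (in == null) throw new ClassNotFoundException(name);
                    byte[] bytes = in.readAllBytes();
                    // Same bytes every time, but defined by *this* loader.
                    loaded = defineClass(name, bytes, 0, bytes.length);
                } catch (IOException e) {
                    throw new ClassNotFoundException(name, e);
                }
            }
            if (resolve) resolveClass(loaded);
            return loaded;
        }
    }
}

class LoaderIdentityDemo {
    // Loads Widget through a fresh isolating loader.
    static Class<?> loadIsolated() throws ClassNotFoundException {
        String name = Widget.class.getName();
        return new IsolatingLoader(name, Widget.class.getClassLoader()).loadClass(name);
    }
}
```

Calling `loadIsolated()` twice gives two `Class` objects with the same name and the same bytecode that the JVM nevertheless treats as unrelated types, and neither of them is the `Widget` the application itself sees. That is why shared interfaces end up in a common parent loader.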

In JEE that sort of thing is necessary, and that's why classloading in JEE is a terrible mess: not only does it not follow any specification, but it is different in almost each and every app server (wasn't there supposed to be portability?!). If you ever used commons-logging in a JEE app, you probably know what I mean. Maybe it got fixed lately, I don't know, but the Tech Guide for commons-logging is an ode to classloader frustration.

Back to deployment: whenever there is some sort of dynamic component model - JEE, Spring or OSGi - there is always reflection. And most of the time there's lots of XML too. It's an escape route from static types. I attended Alef Arendsen's session at JavaEdge that presented OSGi and SpringSource. I carefully watched Alef juggle between XML, source and console like a child who watches a circus magician, trying to uncover his tricks. But I didn't quite figure out the magic. And that was a whole session just for HelloWorld. I know the Spring folks are doing the best they can, and they're smart and all... but compared to Smalltalk, I wasn't quite impressed. As for other solutions, although I haven't tried this out, there's Guice/OSGi integration without XML, with dynamic proxies and on-the-fly bytecode generation with ASM, but there's some overhead for the user, because it requires intermediate objects for services. So one way or the other, it looks like the JVM platform is holding us back.
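Here is the escape route in its simplest form, as a sketch. The `ReflectiveWiring` class is a made-up helper; the strings stand in for what would normally come from an XML file.

```java
import java.lang.reflect.Method;

class ReflectiveWiring {
    // Finds a class by name, creates an instance with its no-arg constructor
    // and calls a no-arg public method by name. None of this is checked by javac.
    static Object call(String className, String methodName) throws ReflectiveOperationException {
        Class<?> type = Class.forName(className);
        Object instance = type.getDeclaredConstructor().newInstance();
        Method method = type.getMethod(methodName);
        return method.invoke(instance);
    }
}
```

`ReflectiveWiring.call("java.util.ArrayList", "isEmpty")` works fine, while a typo such as `"isEmtpy"` compiles just as happily and only fails with a `NoSuchMethodException` when the line actually runs. So the check is dynamic after all, just with a lot more ceremony.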

Verification is an addition to dynamic checks, not a replacement for them. So what we get is basically a triple check of correctness (javac, verifier, dynamic) but a loss of flexibility - we are interfering with the components' runtime life cycles. If the invocation target is resolved just in time, when the call is made, nothing precludes the target component from being reloaded between calls. But with preemptive validation, we get a static dependency tree at runtime, with classes wired to each other "too early" and for good, which makes reloading a component very hard (although people keep trying). The reason for "early linking" is also performance, but late binding doesn't mean that the runtime platform can't do any optimization... the optimizations will just have to be dynamic, in the style of JIT. Will invokedynamic bring the salvation?
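Resolving the target just in time can be sketched on today's JVM with a dynamic proxy. This is only an illustration of the idea, with made-up names (`Greeting`, `ServiceRegistry`, `lateBound`); it is not how OSGi or any particular framework does it.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical service interface shared by caller and provider.
interface Greeting {
    String greet(String name);
}

class ServiceRegistry {
    private final Map<String, Object> services = new ConcurrentHashMap<>();

    // Registering under an existing name replaces the previous implementation.
    void register(String name, Object implementation) {
        services.put(name, implementation);
    }

    // Returns a proxy that looks the target up on every single call,
    // so the implementation can be swapped between calls.
    <T> T lateBound(String name, Class<T> api) {
        Object proxy = Proxy.newProxyInstance(
                api.getClassLoader(),
                new Class<?>[] { api },
                (self, method, args) -> {
                    Object target = services.get(name);
                    if (target == null) {
                        throw new IllegalStateException("no service registered as " + name);
                    }
                    try {
                        return method.invoke(target, args);
                    } catch (InvocationTargetException e) {
                        throw e.getCause(); // surface the service's own exception
                    }
                });
        return api.cast(proxy);
    }
}
```

A client holds on to the proxy returned by `lateBound("greeting", Greeting.class)`, and whoever owns the registry can call `register("greeting", ...)` again at any time; the next `greet` call reaches the new implementation. The price is exactly the intermediate object and the reflective call on every invocation.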

It seems that when we are talking about multiple components, a "statically typed platform" does not quite do the job. Static type checks may mean a lot inside a component, but for inter-component communication they are not only useless, but harmful. People sometimes dismiss dynamic types, because they think "it's like static types, but without static types". What they may not realize is that you are not just losing, you are gaining something with dynamic types. You get late binding and meta-programming, and in a multi-component environment, it means a whole lot!

And that's when we are talking about "inside the platform" components developed in the same language. Once you work with a system that runs on a different platform or is developed in a different language, our type system doesn't normally stretch across the communication boundary. The other system may not even have static types, and since we are only as strong as the weakest link, our static types don't really help us. I think every time we try to encode types into communication between systems we end up with a monster like CORBA or Web Services. But there's another (unfortunately popular) extreme of just sending a string over and hoping for the best - with no checking on our side at all. Then we are relying on the other system to stop us from doing damage, and there's no way to correctly blame the component that made an error - was it a wrong string or an unexpected change on the other side? I think that ideally type or contract checking and conversions can be done dynamically on both sides, and not as part of the protocol. This results in light and flexible data-exchange protocols (like HTTP or Atom) which are easier to work with and I think will win in the end. On the more theoretical level I like this model for intercommunication and of course there are Aliens, which model an external system as a special object in our system.
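Checking the contract dynamically on our side of the boundary could look like the following sketch. The `Order` class and its two fields are invented for the example; the payload is whatever loosely typed map we got after parsing the incoming message.

```java
import java.util.Map;

// Hypothetical typed view of a message received from another system.
final class Order {
    final String item;
    final int quantity;

    private Order(String item, int quantity) {
        this.item = item;
        this.quantity = quantity;
    }

    // Validates and converts at the boundary, naming the offending field,
    // so it is clear which side broke the contract.
    static Order fromPayload(Map<String, ?> payload) {
        Object item = payload.get("item");
        if (!(item instanceof String) || ((String) item).isEmpty()) {
            throw new IllegalArgumentException("item: expected a non-empty string, got " + item);
        }
        Object quantity = payload.get("quantity");
        int parsed;
        try {
            parsed = Integer.parseInt(String.valueOf(quantity));
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("quantity: expected an integer, got " + quantity);
        }
        if (parsed <= 0) {
            throw new IllegalArgumentException("quantity: expected a positive number, got " + parsed);
        }
        return new Order((String) item, parsed);
    }
}
```

Inside our component everything past `fromPayload` is statically typed again, and the wire format stays a plain bag of strings. The sender can run the mirror image of this check before sending.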

So as far as I can see, components simply require a dynamic environment: they may be statically checked inside, but act as "dynamic" to the outside world. Sort of a hard skeleton and a soft shell. Indeed soft parts are much easier to fit together and less breakable, due to their flexibility - this is used often in mechanical engineering and in nature, so why not in software?

Posted by Yardena at 7:10 PM

Labels: java, software engineering
